What is frame rate? And how will it change for next-gen consoles?

We know those next-gen consoles have got your frame rate all a-flutter

Frame rate might feel like a complicated technology, but it's key to making sure that your games don't stutter on screen. Technically speaking, a frame rate figure tells you how many fresh images of content are displayed per second on your TV or monitor. And it doesn’t just apply to games.

24 frames per second (or fps as it's more commonly written) is the standard for cinema, and for most Netflix content. 30 frames a second is the norm for TV broadcasts in the US.

Frame rate in games, though, is more interesting. As games do not deal solely in locked-down, pre-rendered content, the rate can – and often does – change from one fraction of a second to the next. Let’s take a closer look.

Frame Rate – The basics

Frame rate determines how smooth your games look. 30fps is the traditional console standard developers try to maintain, at a minimum, for a satisfying experience. But 60fps is considered ideal, and many competitive gamers consider this a minimum for titles that rely on fast player reactions – think online shooters like Call of Duty, Fortnite, Battlefield and so on, or MOBAs like League of Legends or DOTA.
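One way to make these numbers concrete is to flip them around: a frame rate is also a time budget for drawing each frame. The short Python sketch below is just that arithmetic; the function name is ours, for illustration only.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to draw a single frame, in milliseconds."""
    return 1000.0 / fps


# The three frame rates discussed in this article.
for fps in (30, 60, 120):
    print(f"{fps}fps leaves {frame_budget_ms(fps):.2f} ms per frame")
```

Doubling the frame rate halves the time a console has to finish each image, which is why every step up is harder to hit than the last.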

A stutter can mean the difference between a winner’s PUBG chicken dinner and, well, not winning. Microwave meal anyone?

The upcoming Xbox Series X and PS5 bring frame rates to the attention of people who may not think about them much. These consoles claim to be able to play games at 120fps, for the kind of smoothness usually reserved for those willing to spend thousands on a high-end gaming PC.

This frame rate is made possible by HDMI 2.1, the video connector used in the latest graphics cards and TVs, and the next-gen consoles. The older HDMI 2.0 standard, seen in the Xbox One X, can actually already handle up to 120fps at 1080p resolution (and 4K at 60fps), but HDMI 2.1 raises the 120fps ceiling to 4K resolution and adds support for 8K. It is future-proof.
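For a rough feel of why the connector matters, you can estimate how much raw picture data each mode pushes down the cable. The sketch below is back-of-the-envelope only: it assumes 24 bits per pixel, ignores blanking intervals and signal-encoding overhead, and compares against the headline link rates of 18Gbit/s for HDMI 2.0 and 48Gbit/s for HDMI 2.1. The function and variable names are ours.

```python
# Headline maximum link rates in Gbit/s. Usable video bandwidth is lower
# in practice, because some of the link is spent on encoding overhead.
HDMI_LINK_GBPS = {"HDMI 2.0": 18.0, "HDMI 2.1": 48.0}


def raw_video_gbps(width: int, height: int, fps: int, bits_per_pixel: int = 24) -> float:
    """Uncompressed pixel data rate in Gbit/s (assumption: no blanking, no overhead)."""
    return width * height * fps * bits_per_pixel / 1e9


def fits(link: str, width: int, height: int, fps: int) -> bool:
    """True if the raw pixel rate is within the link's headline rate."""
    return raw_video_gbps(width, height, fps) <= HDMI_LINK_GBPS[link]


modes = {
    "1080p at 120fps": (1920, 1080, 120),
    "4K at 60fps": (3840, 2160, 60),
    "4K at 120fps": (3840, 2160, 120),
}

for name, (width, height, fps) in modes.items():
    rate = raw_video_gbps(width, height, fps)
    verdict = ", ".join(
        f"{link}: {'yes' if fits(link, width, height, fps) else 'no'}"
        for link in HDMI_LINK_GBPS
    )
    print(f"{name}: about {rate:.1f} Gbit/s ({verdict})")
```

Under these assumptions, 4K at 120fps needs roughly 24Gbit/s of raw pixel data, which is more than HDMI 2.0's headline rate and comfortably inside HDMI 2.1's.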

Making frames

A new video socket does not suddenly mean the Xbox Series X will be able to play Halo Infinite at 120fps, though.

Think of the frame rate as the power of a console (or PC) divided by the complexity of the scene being rendered at that moment. This is why a game might occasionally look jerky when the view goes from an indoor scene to a wide-open outdoor one, with stacks of extra trees, buildings and smiling sentient mushrooms to draw. The amount of work to do suddenly jumps up, but the ability to do it does not, so the frame rate dips.
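That "power divided by complexity" idea can be written down as a toy model. Everything in this sketch is hypothetical: the "units of work" are invented for illustration and do not correspond to any real console or game.

```python
def estimated_fps(work_per_second: float, work_per_frame: float) -> float:
    """Toy model: frames per second = available power / cost of one frame."""
    return work_per_second / work_per_frame


# Hypothetical console that can get through 6,000 units of work each second.
CONSOLE_POWER = 6000.0

# Hypothetical cost of drawing one frame of each scene.
scenes = {
    "indoor corridor": 100.0,
    "open outdoor vista": 250.0,
}

for name, cost in scenes.items():
    print(f"{name}: {estimated_fps(CONSOLE_POWER, cost):.0f}fps")
```

The console is no weaker in the second scene. Each frame simply costs more, so fewer of them fit into a second.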

Frame rate is usually dependent on the power of a system’s graphics card, but some titles are unusually heavy on the CPU too. Civilization 6 is a good example, as the central processor is used to calculate the decisions all your AI opponents make, whether they are on-screen or not.

It is not always the case that a game will play as fast as the hardware allows, though. Many games, particularly console ones, are “locked” at a frame rate, usually 30fps or 60fps. This does not guarantee the rate will never go below that figure, just that it will not head above it. A locked frame rate leaves more spare system resources, from second to second, and this helps keep the rate consistent as in-game demand fluctuates.
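Here is a minimal sketch of what a lock does to the numbers, using made-up frame rate samples rather than measurements from a real game. The cap trims the peaks but cannot lift a dip that falls below it.

```python
def capped_fps(uncapped_fps: float, cap: float) -> float:
    """A lock only limits the top end; it cannot raise a rate below the cap."""
    return min(uncapped_fps, cap)


# Hypothetical second-by-second rates the hardware could reach with no lock.
uncapped = [75, 48, 90, 62, 28]

# The same moments with a 30fps lock applied.
locked = [capped_fps(fps, 30) for fps in uncapped]

print("Unlocked:", uncapped, "swing:", max(uncapped) - min(uncapped))
print("Locked:  ", locked, "swing:", max(locked) - min(locked))
```

In this invented example the unlocked rate swings by 62fps, while the locked one swings by just 2fps, and the last sample still dips below the lock because the hardware could not reach 30fps at that moment.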

PC gamers tend to be much more conscious of frame rates than the console crowd, because there you have real control. With a PC you can manually change the resolution, fiddle with the lighting effects used, the draw distance and any number of other parameters. There’s none of this on console (with just a few exceptions), because the developer has already optimised its game to work on your specific console, whether it is an original Xbox One or a PS4 Pro.

Just press the button and play: it’s one of the joys of console gaming (until you have a break from it and find 47 system updates you need to download before playing, anyway).

Framing control

Oddly enough, you often have more control over the settings that determine frame rate in a mobile game than one on console these days. And it’s all down to the level of variation in the hardware used to play any one game. On PC it’s almost limitless. RAM, storage speed, CPUs, GPUs, overclocking and cooling all have an effect. And thousands of different Android phones might be able to play the one game.

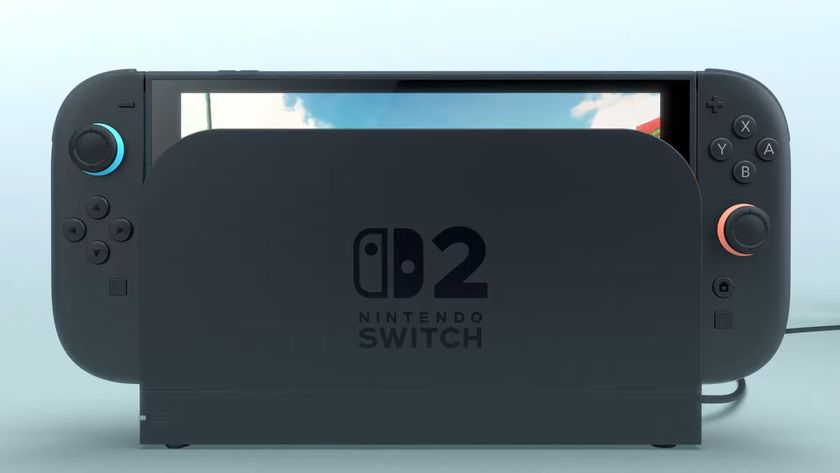

But there are only three significantly different Xbox One consoles, three types of PS4 and two kinds of Nintendo Switch.

Some of you may be surprised to hear the lengths developers have had to go to in order to make their games run well on console, even with carefully tailored optimisation. For example, Mirror’s Edge: Catalyst runs at 900p resolution on PS4, and 720p on Xbox One. Assassin’s Creed Syndicate runs at 900p on both vanilla versions of the current-gen systems. They are not even “Full HD”.

“Pro” versions of the consoles took off these limiters, for the most part. The Xbox One X can already play a lot of games at true 4K resolution, and doubles the frame rate of many to 60fps too.

Is next-gen ready for 120fps?

So if the Xbox Series X has twice the graphics power of the One X, 12 teraflops to six, why shouldn’t it be able to do the same and kick up the resolution to 8K, the frame rate to 120fps?

The Xbox Series X and PS5 mark the start of a new generation, which allows developers to work from a much deeper pool of resources. Games “Enhanced” for Xbox One X are still made, to a great extent, with the original Xbox One in mind as a reference point.

A generational reboot lets developers invest more in expensive (in processing terms) lighting effects like ray tracing, and maintaining fine texture quality deep into the draw distance. Just as the frame rate is a balancing act of power and the demands of the scene rendered, developers will, as ever, balance the benefits of better lighting, shadows, textures, and more detailed character models, with those of above-60fps frame rates and ultra-niche 8K resolution.
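A quick bit of arithmetic shows why doubling the teraflops does not simply buy 8K at 120fps. The sketch below assumes, as a simplification, that rendering cost scales with the number of pixels drawn per second, and it takes 4K at 60fps as the Xbox One X baseline. Real games are far messier than this.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Pixels that must be drawn every second at a given resolution and frame rate."""
    return width * height * fps


# Assumed baseline: a game running at 4K and 60fps on Xbox One X.
baseline = pixels_per_second(3840, 2160, 60)

# The dream target: 8K at 120fps.
target = pixels_per_second(7680, 4320, 120)

workload_ratio = target / baseline  # how much more drawing work the target needs
power_ratio = 12 / 6                # Series X teraflops vs One X teraflops

print(f"Workload grows {workload_ratio:.0f}x, graphics power grows {power_ratio:.0f}x")
```

8K has four times the pixels of 4K, and 120fps is twice 60fps, so the workload rises eightfold against a doubling of graphics power, before any budget is spent on ray tracing or better textures.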

For now, at least, a 120fps frame rate is more likely to be the result of an Xbox Series X game also made for the Xbox One than a truly ambitious attempt to see what the next generation can really do. We already know this cross-over effect will happen too, not least because it makes financial sense. Developers need to cater to early adopters and the much wider audience of Xbox One and PS4 owners.

Cyberpunk 2077 is one of these titles. It will come to both Xbox One and Series X eventually. But if CD Projekt Red can’t do a good job of this generation-spanning, who will?

Hz and fps: frame rate and refresh rate

At this point we should also clear up some of the realities around frame rates and refresh rates. To fully appreciate the benefits of ultra-fast frame rates like 120fps, you also need a display with a fast refresh rate.

This stat, expressed as so many hertz (Hz), tells you how many times a second a screen’s image is fully refreshed. You do not have to pay all that much for very “fast” 144Hz or 240Hz gaming monitors because, unsurprisingly, the companies that make the things made this a priority a long time ago.

The same cannot be said about TVs. LG’s 55UM7610PLB is a TV you might consider if you want a large, smart-looking set that will not clean out your savings, but still supports 4K and HDR.

However, its refresh rate is 60Hz. While it has a TruMotion 100 mode that may suggest this can be boosted to 100Hz, this only refers to the TV adding extra in-between (interpolated) frames in order to improve motion clarity. It cannot make full use of the 120Hz/120fps signal that should be possible with the next-gen consoles.
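The relationship between the two numbers can be sketched in a line of code: a screen cannot show more distinct frames per second than it refreshes. This simplified model uses a hypothetical function name and ignores Variable Refresh Rate, screen tearing, and how a particular TV handles a signal it cannot display natively.

```python
def visible_fps(source_fps: float, refresh_hz: float) -> float:
    """Distinct game frames you can actually see per second (simplified model)."""
    return min(source_fps, refresh_hz)


# (frame rate sent by the console, refresh rate of the display)
setups = [(120, 60), (120, 120), (30, 60)]

for source_fps, refresh_hz in setups:
    shown = visible_fps(source_fps, refresh_hz)
    print(f"{source_fps}fps on a {refresh_hz}Hz screen: {shown}fps visible")
```

On a 60Hz set, half of a 120fps signal's frames never reach your eyes. In the other direction, a 30fps game on the same screen simply has each frame held for two refreshes.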

You need to buy quite a high-end TV to really dig into the corners of the Xbox Series X’s and PS5’s capabilities. The LG C9 OLED TV is one of our current higher-end favourites. It has a 100/120Hz refresh rate, and also supports the Variable Refresh Rate feature added in HDMI 2.1.

But why is it a 100/120Hz TV, and (for those looking into buying a new TV) why are some others listed at 50/60Hz?

You’re most likely to see these “variable” figures in the UK and Europe. Back in the old days of analogue TV, and games that really were tied to their PAL/NTSC geographic territories, televisions’ refresh rates were synchronised to the frequency of the mains electricity in your house. The UK has 50Hz AC, the US 60Hz. But that no longer matters, as TVs no longer sync their refresh rate to the AC.

For more details on all the next-gen jargon, check out more of our next-gen explainers.

Andrew is a technology journalist with over 10 years of experience. Specializing particularly in mobile and audio tech, Andrew has written for numerous sites and publications, including Stuff, Wired, TrustedReviews, TechRadar, T3, and Wareable.
